
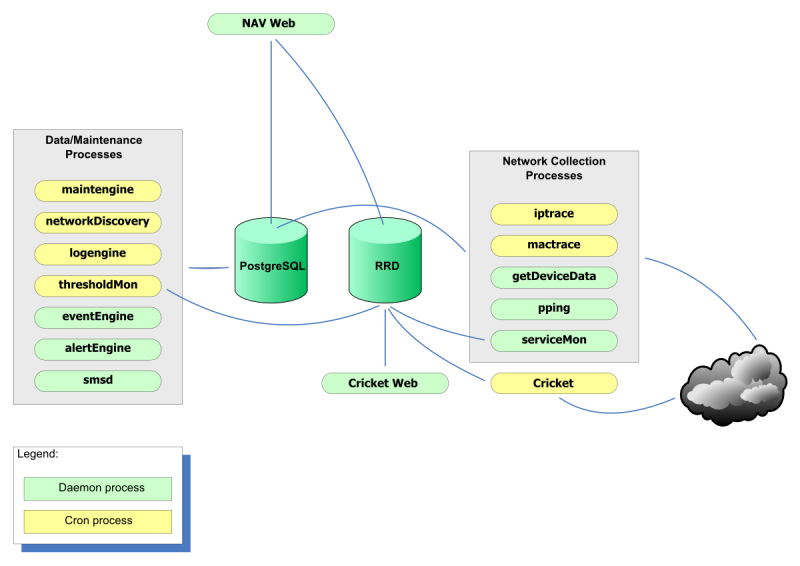

Back-end processes in NAV

NAV has a number of back-end processes. This document gives an overview, listing key information and a detailed description for each process. We also give references to documentation found elsewhere on metaNAV.

The figure at the top of this page complements this document (the NAV 3.3 snmptrapd is not included in the figure):

nav list / nav status

nav list lists all back-end processes. nav status tells you if they are running as they should.

nav list gives you a list of the back-end processes, each described in its own section of this document. Some entries in the list cover more than one process:

- cricket (includes makecricketConfig, Cricket collector and cleanrrds)

- networkDiscovery (physical and vlan discovery)

Building the network model

getDeviceData

Key information

| Process name | getDeviceData |
|---|---|
| Alias | gDD / the SNMP data collector |
| Polls network | Yes |
| Brief description | Collects SNMP data from the equipment in the netbox table and stores data about the equipment in a number of tables. Does not build topology. |
| Depends upon | Seed data must be present in the netbox table, entered either with the Edit Database tool or with the autodiscovery contrib. |
| Updates tables | netbox, netboxsnmpoid, netboxinfo, device, module, gwport, gwportprefix, prefix, vlan, swport, swportallowedvlan, netbox_vtpvlan |
| Run mode | Daemon process. Thread based. |
| Default scheduling | Initial data collection for new netboxes is done every 5 minutes. Update polls of existing netboxes are done every 6 hours. Collection of certain OIDs may deviate from this interval; e.g. the moduleMon OID is polled every hour. |
| Config file | getDeviceData.conf |
| Log files | getDeviceData.log and getDeviceData/getDeviceData-stderr.log |
| Programming language | Java |
| Lines of code | Approx 8200 |
| Further doc | tigaNAV report chapter 5 |

Details

- Initial OID classification

When gDD detects a new box with a valid SNMP read community (regardless of category), it starts the initial OID classification. gDD tests the netbox against every OID in the snmpoid table and uses the results to populate the netboxsnmpoid table. The test is based on attributes in the snmpoid table; see the further doc referenced above for details. The polling frequency for each OID is set from snmpoid.defaultfreq.
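The classification step can be sketched roughly as below. This is a simplified illustration. It assumes a hypothetical `snmp_get(netbox, oid)` callable that returns `None` when the box does not answer. It also represents snmpoid rows as plain dicts with `oidkey`, `snmpoid` and `defaultfreq` keys. The real test uses more of the snmpoid attributes (see the referenced doc), and gDD itself is written in Java.

```python
from typing import Callable, Dict, List, Optional


def classify_netbox(
    netbox: str,
    snmpoids: List[Dict],
    snmp_get: Callable[[str, str], Optional[str]],
) -> List[Dict]:
    """Return netboxsnmpoid-like rows for the OIDs the netbox answers (sketch)."""
    rows = []
    for oid in snmpoids:
        # Simplification: treat an OID as supported if a GET returns any value.
        if snmp_get(netbox, oid["snmpoid"]) is not None:
            rows.append({
                "netbox": netbox,
                "oidkey": oid["oidkey"],
                # The polling frequency starts out as the OID's default frequency.
                "frequency": oid["defaultfreq"],
            })
    return rows
```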

- Plugin-based architecture

gDD has a plugin-based architecture. Plugins fall into two types, device plugins and data plugins:

- Device plugins collect data with SNMP. Each device plugin is geared towards a particular type of equipment and supports a particular subset of OIDs. See further doc for details.

- Data plugins update NAVdb with data fed from the device plugins. Each data plugin is responsible for a particular table (or set of tables) in the database. See further doc for details.
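The split between device plugins and data plugins could look something like the sketch below. The class and method names (`can_handle`, `collect`, `store`) are hypothetical and are not gDD's actual Java interfaces. Collected data is represented as a dict from table name to rows, so that each data plugin only sees the table it owns.

```python
class DevicePlugin:
    """Collects SNMP data for the equipment types it supports (hypothetical API)."""

    def can_handle(self, netbox):
        raise NotImplementedError

    def collect(self, netbox):
        """Return a dict mapping table name -> list of rows."""
        raise NotImplementedError


class DataPlugin:
    """Writes collected data to the table it is responsible for (hypothetical API)."""

    table = None

    def store(self, netbox, rows):
        raise NotImplementedError


def poll(netbox, device_plugins, data_plugins):
    collected = {}
    # Every device plugin that supports this equipment contributes data.
    for plugin in device_plugins:
        if plugin.can_handle(netbox):
            for table, rows in plugin.collect(netbox).items():
                collected.setdefault(table, []).extend(rows)
    # Each data plugin receives only the rows for its own table.
    for data_plugin in data_plugins:
        if data_plugin.table in collected:
            data_plugin.store(netbox, collected[data_plugin.table])
```

With this design, support for a new equipment type only needs a new device plugin, and the database code stays in one place per table.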

- Module monitor

The module monitor is a data plugin within gDD. Its dedicated function is to detect outages of modules in operating netboxes. When a module is detected as down, a moduleDown event is posted on the event queue (eventq).

iptrace

Key information

| Process name | iptrace |
|---|---|
| Alias | IP-to-MAC collector / arplogger |
| Polls network | Yes |
| Brief description | Collects ARP data from routers and stores this information in the arp table. |
| Depends upon | The routers (GW / GSW) must be in the netbox table. To assign prefixes to ARP entries, getDeviceData must have done router data collection. |
| Updates tables | arp |
| Run mode | cron |
| Default scheduling | Every 30 minutes (0,30 * * * *). No threads. |
| Config file | pping.conf |
| Log file | pping.log |
| Programming language | Perl |
| Lines of code | Approx 130 lines |
| Further doc | NAVMe report ch 4.5.8 (Norwegian) |

Details

- iptrace understands proxy arp and will not store arp entries that are “false”.

- iptrace ignores routers that are known to be down (fixed in NAV 3.1).

- The command line tool navclean.py offers a means of deleting old arp (and cam) entries.

mactrace

Key information

| Process name | mactrace |
|---|---|
| Alias | MAC-to-switch-port collector / getBoksMacs / cam logger |
| Polls network | Yes |
| Brief description | Collects bridge table data (the MAC addresses behind each switch port) for all switches (categories GSW, SW, EDGE). The process also checks for ports blocked by spanning tree. |
| Depends upon | getDeviceData must have created the swport records for the switches. |
| Updates tables | cam (MAC addresses), netboxinfo (CDP neighbors), swp_netbox (the candidate list for the physical topology builder), swportblocked (switch ports that are blocked by spanning tree for a given VLAN). |
| Run mode | cron |
| Default scheduling | Every 15 minutes (11,26,41,56 * * * *). 24 threads by default. |
| Config file | getBoksMacs.conf |
| Log file | getBoksMacs.log |
| Programming language | Java |
| Lines of code | Approx 1400 |
| Further doc | NAVMore report ch 2.1 (Norwegian), tigaNAV report ch 5.4.5 and ch 5.5.3 |

Details

- cam data is not collected from ports that connect to other switches (as determined by the physical topology builder). Typically, cam records are stored initially, before the topology is built. When topology information is in place, the end time of the relevant cam records is set.

- The command line tool navclean.py offers a means of deleting old cam (and arp) entries.

The algorithm

The cam logger runs every fifteen minutes. On each run, all switches (SW and KANT, i.e. edge switches) are fetched from the database and placed in a queue. A number of threads (24 by default) are then started, all working on this queue. Each thread checks whether there is a box in the queue. If there is, the thread takes it out and queries it with SNMP. When finished, the thread checks the queue again, and if the queue is empty, the thread exits. All threads therefore keep taking boxes from the queue until it is empty, and at that point every remaining box already has a thread working on it. This ensures that all threads have work to do at all times, even if some boxes take much longer to collect data from than others.
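The queue-and-worker scheme can be sketched in Python as follows. The real cam logger is Java. `query_switch` is a hypothetical stand-in for the per-switch SNMP collection. All switches are enqueued before any thread starts, so a thread that finds the queue empty can safely exit.

```python
import queue
import threading


def collect_all(switches, query_switch, num_threads=24):
    """Process every switch with a fixed pool of threads sharing one queue."""
    work = queue.Queue()
    for switch in switches:
        work.put(switch)

    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            try:
                switch = work.get_nowait()
            except queue.Empty:
                return  # queue is empty: this thread is done
            data = query_switch(switch)  # the SNMP query would happen here
            with lock:
                results[switch] = data

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

A slow switch only ties up one thread. The other threads keep draining the queue in the meantime.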

–

The cam logger, responsible for the collection of MAC addresses and CDP data, has been updated to make use of the OID database. This has greatly simplified its internal structure as all devices are now treated in a uniform manner; the immediate benefit is that data collection is no longer dependent on type information and no updates should be necessary to support new types. Upgrades in the field can happen without the need for additional updates to the NAV software.

–

The cam logger collects the bridge tables of all switches and saves the MAC entries in the cam table of the NAVdb. In addition, it collects CDP data from all switches and routers that support this feature; the result is saved in the swp_netbox table for use by the network topology discovery system.

While its basic operation remains the same, it has been rewritten to take advantage of the OID database. The internal data collection framework has been unified, and all devices are treated in the same manner. Data collection is therefore no longer based on type information, and a standard set of OIDs is used for all devices. When a new type is added to NAV, cam logging should “just work”, which is a major design goal of NAV v3.

One notable improvement is the addition of the interface field to the swport table. It is used for matching the CDP remote interface and makes this matching much more reliable. Also, both the cam and the swp_netbox tables now use netboxid and ifindex to uniquely identify a switch port, instead of the old (netboxid, module, port) triple. This has significantly simplified switch port matching. Reliability has increased as well, especially since the old module field of swport was a shortened version of what is today the interface field.

networkDiscovery_topology

Key information

| Process name | networkDiscovery.sh topology |
|---|---|
| Alias | Physical Topology Builder |
| Polls network | No |
| Brief description | Builds the physical topology of the network, i.e. which netbox is connected to which netbox. |
| Depends upon | mactrace fills the swp_netbox table with the candidate list of physical neighbors. The physical topology builder works from this data. |
| Updates tables | Sets the to_netboxid and to_swportid fields in the swport and gwport tables. |
| Run mode | cron |
| Default scheduling | Every hour (35 * * * *) |
| Config file | None |
| Log file | networkDiscovery/networkDiscovery-topology.html and networkDiscovery/networkDiscovery-stderr.log |
| Programming language | Java |
| Lines of code | Approx 1500 (shared with the vlan topology builder) |
| Further doc | tigaNAV report ch 5.5.4 |

Details

The network topology discovery system automatically discovers the physical topology of the network monitored by NAV. It bases this on the data in the swp_netbox table collected by the cam logger. No major updates have been necessary apart from adjustments to the new structure of the NAVdb, and the basic algorithm remains the same. The implementation is somewhat complicated because it must handle missing data gracefully, but a simplified description follows:

- We start with a candidate list for each switch port. These are the switches located behind the port, and the goal of the algorithm is to pick the one the port is directly connected to. Some of the candidate lists, namely those of the switches one level up from the edge, will contain only one candidate. We can thus pick this candidate as the directly connected switch and remove it from all other lists. After this removal, more candidate lists will have only one candidate, and we can apply the same procedure again.

- If we had complete information about the network, we could simply iterate until all candidate lists were empty. To deal with missing information, however, we sometimes have to make an educated guess about which switch is directly connected. The network topology discovery system makes this guess by looking at how far each candidate is from the router and how many switches are connected below it. It then tries to pick the candidate that most closely matches the current switch.

In practice the use of CDP makes this process very reliable for the devices supporting it, and this makes it easier to correctly determine the remaining topology even in the case of missing information.
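The simplified elimination procedure described above can be sketched as below. This is a minimal illustration and not the Java implementation. It assumes each candidate list holds only the switches downstream of the port. It also leaves out the "educated guess" step: lists that cannot be narrowed down are simply returned as unresolved.

```python
def resolve_topology(candidates):
    """candidates maps (switch, port) -> set of switches seen behind that port."""
    remaining = {port: set(seen) for port, seen in candidates.items()}
    links = {}
    while True:
        # Ports whose candidate list has been narrowed to exactly one switch.
        resolved = {port: next(iter(seen))
                    for port, seen in remaining.items() if len(seen) == 1}
        if not resolved:
            break
        links.update(resolved)
        picked = set(resolved.values())
        for port in resolved:
            del remaining[port]
        # Remove the picked switches from every other candidate list.
        for seen in remaining.values():
            seen -= picked
    # Non-empty leftovers are where the real system would have to guess.
    unresolved = {port: seen for port, seen in remaining.items() if seen}
    return links, unresolved
```

For a chain gw, sw1, sw2, sw3, the port on sw2 sees only sw3, so it resolves first. Removing sw3 then narrows sw1's port to sw2, and so on up to the router.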

networkDiscovery_vlan

Key information

| Process name | networkDiscovery.sh vlan |
|---|---|
| Alias | Vlan Topology Builder |
| Polls network | No |
| Brief description | Builds the per-VLAN topology of the switched network with interconnected trunks. The algorithm is a top-down depth-first traversal starting at the primary router port for the VLAN. |
| Depends upon | The physical topology needs to be in place, so this process runs after the physical topology builder. |
| Updates tables | swportvlan |
| Run mode | cron |
| Default scheduling | Every hour (38 * * * *) |
| Config file | None |
| Log file | networkDiscovery/networkDiscovery-vlan.html and networkDiscovery/networkDiscovery-stderr.log |
| Programming language | Java |
| Lines of code | See the physical topology builder above |
| Further doc | tigaNAV report ch 5.5.5 |

Details

After the network topology discovery system has mapped the physical topology of the network, the logical topology, i.e. the VLANs, still remains to be explored. Modern switches support trunking, which can transport several independent VLANs over a single physical link. The logical topology can therefore be non-trivial, and in practice it usually is.

The vlan discovery system uses a simple top-down depth-first graph traversal algorithm to discover which VLANs actually run on the different trunks, and in which direction. Direction is defined relative to the router port at the top of the tree: the port currently owning the lowest gateway IP, or the virtual IP in the case of HSRP. In addition, NAV v3 fully supports reuse of VLAN numbers. For every non-trunk port it encounters, the vlan discovery system therefore also links the VLAN number to the actual VLAN as defined in the vlan table.

A special case is closed VLANs, which have no gateway IP. The vlan discovery system still traverses these VLANs without setting any direction, and creates a new VLAN record in the vlan table. The NAV administrator can fill in descriptive information afterwards if desired.

The implementation of this subsystem is again complicated by several factors:

- The VLAN must be checked at both ends of a trunk to see whether it is allowed to traverse it.

- VLAN numbers on each end of a non-trunk link need not match. The number closer to the top of the tree then takes precedence, and the VLAN numbers further down are rewritten to match.

- Both trunks and non-trunks can be blocked by the spanning tree protocol, again at either end.

- It needs to be highly efficient and scalable for large networks with thousands of switches and tens of thousands of switch ports.
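A minimal sketch of the top-down depth-first traversal for a single VLAN follows. It includes the check at both ends of each trunk and the spanning tree blocking check. The data structures (`links`, `allowed`, `blocked`) are hypothetical stand-ins for the swport, swportallowedvlan and swportblocked data. VLAN-number rewriting on non-trunk links and closed VLANs are left out. The recursion depth grows with the depth of the switch tree, which is fine for a sketch.

```python
def vlan_topology(vlan, root, links, allowed, blocked=frozenset()):
    """
    Depth-first traversal of one VLAN, starting at the router's switch.

    links:   switch -> list of (local_port, neighbor_switch, neighbor_port)
    allowed: (switch, port) -> set of VLANs allowed on that trunk port
    blocked: set of (switch, port, vlan) blocked by spanning tree
    Returns a list of (switch, port, direction), direction "down" or "up".
    """
    def carries(switch, port):
        return (vlan in allowed.get((switch, port), set())
                and (switch, port, vlan) not in blocked)

    result, visited = [], {root}

    def visit(switch):
        for port, neighbor, remote_port in links.get(switch, []):
            if neighbor in visited:
                continue
            # The VLAN must be allowed, and not blocked, at both ends.
            if carries(switch, port) and carries(neighbor, remote_port):
                visited.add(neighbor)
                result.append((switch, port, "down"))        # away from router
                result.append((neighbor, remote_port, "up"))  # towards router
                visit(neighbor)  # descend before looking at the next sibling

    visit(root)
    return result
```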

Monitoring the network

pping

Key information

| Process name | pping |
|---|---|
| Alias | The status monitor / parallel pinger |
| Polls network | Yes |
| Brief description | Pings all boxes in the netbox table. Works efficiently in parallel and can ping a large number of boxes. Has configurable robustness criteria for deciding when a box is actually down. |
| Depends upon | The netboxes must be in the netbox table. |
| Updates tables | Posts events on the eventq table. Also sets the netbox.up value. |
| Run mode | daemon |
| Default scheduling | Pings all hosts every 20 seconds. Waits at most 5 seconds for an answer. After 4 consecutive “no answers” the box is declared down as seen from pping. |
| Config file | pping.conf |
| Log file | pping.log |
| Programming language | Python |
| Lines of code | Approx 4200, shared with servicemon |
| Further doc | See below, based on and translated from NAVMore report ch 3.4 (Norwegian) |

Details

pping is a daemon with its own (configurable) scheduling. pping works in parallel, which makes each ping sweep very efficient. By default a ping sweep runs every 20 seconds, and the maximum allowed response time for a host is 5 seconds. A host is declared down on the event queue after four consecutive “no responses” (also configurable). This means that it takes between 80 and 99 seconds from the moment a host goes down until pping declares it down.

Please note that the event engine has a grace period of one minute (configurable) before a “box down warning” is posted on the alert queue. It waits another three minutes before the box is declared down (also configurable). In summary, expect 5-6 minutes before a host is declared down.
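The 5-6 minute estimate is just the sum of these delays. The small calculation below assumes that the default grace periods (one minute to the warning, three more minutes to boxDown) add directly to pping's own 80-99 second detection window.

```python
def total_detection_delay(pping_delay_s, warning_grace_s=60, down_grace_s=180):
    """Seconds from a host going down until the event engine declares it down."""
    return pping_delay_s + warning_grace_s + down_grace_s


# pping's own detection window, as given in the text: 80 to 99 seconds.
for pping_delay in (80, 99):
    total = total_detection_delay(pping_delay)
    print(f"pping after {pping_delay} s -> boxDown after {total} s ({total / 60:.2f} min)")
```

This gives 320 and 339 seconds, i.e. between roughly 5.3 and 5.7 minutes.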

The configuration file pping.conf lets you adjust the following:

| parameter | description | default |
|---|---|---|
| user | The user that runs the service | navcron |
| packet size | Size of the ICMP packet | 64 bytes |
| check interval | How often to run a ping sweep | 20 seconds |
| timeout | Seconds to wait for a reply after the last ping request is sent | 5 seconds |
| nrping | Number of requests without an answer before the device is marked as unavailable | 4 |
| delay | Milliseconds between each ping request | 2 ms |

In addition you can configure debug level, location of log file and location of pid file.

Note: To uniquely identify the ICMP echo response packets, pping needs to tailor-make the packets with its own signature. This reduces the overall throughput a bit, but pping can still manage 90-100 hosts per second, which should be sufficient for most needs.

Algorithm - one ping sweep

pping has three threads:

1. Thread 1 generates and sends out the icmp packets.

2. Thread 2 receives echo replies, checks the signature and stores the result to RRD.

3. The main thread does the main scheduling and reports to the event queue.

Thread 1 works this way:

FOR every host DO:

1. Generate an icmp echo packet with: (destination IP, timestamp, signature)

2. Send the icmp echo.

3. Add host to the "Waiting for response" queue.

4. Sleep for the configured delay (default 2 ms). This delay spreads out the response times, which in turn shortens the receive thread's queue and in effect makes the measured response times more accurate.

Thread 2 works this way:

As long as thread 1 is operating, and as long as there are hosts in the "Waiting for response" queue, with a timeout of 5 seconds (configurable):

1. Check if we have received packets

2. Get the data (the icmp reply packet)

3. Verify that the packet belongs to our pid.

4. Split the packet into (destination IP, timestamp, signature). If the IP or the signature is wrong, discard the packet.

5. If the IP address is on the "Waiting for response" queue, update the response time for the host and remove the host from the queue.
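The signature handling in thread 1 (step 1) and thread 2 (step 4) can be illustrated with a hypothetical payload layout. pping's actual packet format is not documented here. This sketch packs the destination IP, a timestamp and a fixed 16-byte signature into the ICMP payload with `struct`, and rejects replies whose embedded IP or signature does not match. The pid check (step 3) belongs to the ICMP header's identifier field and is not shown.

```python
import socket
import struct
import time

# Hypothetical payload layout: 4-byte IPv4 address, 8-byte float timestamp,
# 16-byte signature, all in network byte order (28 bytes in total).
PAYLOAD_FORMAT = "!4sd16s"
SIGNATURE = b"pping-sketch-sig"  # hypothetical signature, exactly 16 bytes


def build_payload(dest_ip, timestamp=None):
    """Thread 1, step 1: embed (destination IP, timestamp, signature)."""
    if timestamp is None:
        timestamp = time.time()
    return struct.pack(PAYLOAD_FORMAT, socket.inet_aton(dest_ip), timestamp, SIGNATURE)


def parse_payload(payload, source_ip):
    """Thread 2, step 4: return (dest_ip, timestamp), or None to discard."""
    try:
        raw_ip, timestamp, signature = struct.unpack(PAYLOAD_FORMAT, payload)
    except struct.error:
        return None  # wrong length: not one of our packets
    dest_ip = socket.inet_ntoa(raw_ip)
    if signature != SIGNATURE or dest_ip != source_ip:
        return None  # wrong signature or IP: discard
    return dest_ip, timestamp
```

The response time for an accepted reply is then the receive time minus the embedded timestamp.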

When thread 2 finishes, the sweep is over. For any hosts still remaining on the "Waiting for response" queue, we set the response time to "None" and increment the host's "number of consecutive no-replies" counter.

When the main thread detects that a host has too many no-replies, a down event is posted on the event queue.

Note that the response times are recorded to RRD which gives us response time and packet loss data as an extra bonus.
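The end-of-sweep bookkeeping can be sketched as below. The function name and data structures are hypothetical. One assumption deserves mention: the sketch reports a host only once, at the sweep where its counter reaches `nrping`, rather than on every following sweep. A reply resets the counter.

```python
def finish_sweep(hosts, replies, no_reply_counts, nrping=4):
    """
    hosts:           hosts pinged in this sweep
    replies:         host -> response time (seconds) for hosts that answered
    no_reply_counts: host -> consecutive no-replies so far (updated in place)
    Returns (response_times, newly_down).
    """
    response_times = {}
    newly_down = []
    for host in hosts:
        if host in replies:
            response_times[host] = replies[host]
            no_reply_counts[host] = 0
        else:
            # Still "waiting for response" when the sweep ended.
            response_times[host] = None
            no_reply_counts[host] = no_reply_counts.get(host, 0) + 1
            if no_reply_counts[host] == nrping:
                newly_down.append(host)  # post a down event once
    return response_times, newly_down
```

The `response_times` dict is what would be written to RRD, with `None` recorded as packet loss.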

servicemon

Key information

| Process name | servicemon |
|---|---|
| Alias | The service monitor |
| Polls network | Yes |
| Brief description | Monitors services on netboxes. Uses handlers to deal with a growing number of services; ssh, http, imap, pop3, smtp, samba, rpc, dns and dc are currently supported. |
| Depends upon | The service and serviceproperty tables must contain data. Edit Database fills these in when the NAV administrator registers the services to be monitored. |
| Updates tables | Posts servicemon events on the eventq table. |
| Run mode | daemon |
| Default scheduling | Checks each service every 60 seconds. Timeouts vary between services, from 5 to 10 seconds. If a service does not respond three times in a row, servicemon declares it down. |
| Config file | servicemon.conf |
| Log file | servicemon.log |
| Programming language | Python |
| Lines of code | See pping above, shared code base |
| Further doc | NAVMore report ch 3.5 (Norwegian) |

Details

- see the NAVMore report as referenced for details

thresholdMon

Key information

| Process name | thresholdMon |
|---|---|
| Alias | The threshold monitor |
| Polls network | No |
| Brief description | At run time, it fetches all thresholds in the RRD database and compares them to the datasource in the corresponding RRD file. If a threshold has been exceeded, it sends an event containing the relevant information. The default threshold value is 90% of maximum. |
| Depends upon | The RRD database must be filled with data, which is done by makecricketconfig. In addition, you must manually run a command line tool, fillthresholds.py, to set the thresholds to a configured level. A more advanced solution for setting different thresholds is under development. |
| Updates tables | eventq with thresholdmon events |
| Run mode | cron |
| Default scheduling | Every 5 minutes (*/5 * * * *) |
| Config file | fillthresholds.conf |
| Log file | thresholdMon.log |
| Programming language | Python |
| Lines of code | Approx 400 |
| Further doc | See ThresholdMonitor |

Details

- See ThresholdMonitor
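The core comparison described in the table can be sketched as follows. This is an illustration under stated assumptions, not thresholdMon's code. Datasources are represented as dicts with hypothetical `id` and `threshold` keys. `latest_value` is a hypothetical callable that reads the newest value from the RRD file. The sketch returns the exceeded datasources instead of posting events.

```python
DEFAULT_THRESHOLD_FRACTION = 0.9  # "90% of maximum", the default from the table


def default_threshold(max_value):
    return DEFAULT_THRESHOLD_FRACTION * max_value


def check_thresholds(datasources, latest_value):
    """
    datasources:  list of dicts with hypothetical keys 'id' and 'threshold'
    latest_value: callable returning the newest RRD value for a datasource id,
                  or None if no data is available
    Returns the datasources whose threshold has been exceeded.
    """
    exceeded = []
    for ds in datasources:
        if ds.get("threshold") is None:
            continue  # no threshold set (e.g. fillthresholds.py not run)
        value = latest_value(ds["id"])
        if value is not None and value > ds["threshold"]:
            exceeded.append(ds)  # thresholdMon would post an event here
    return exceeded
```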

moduleMon

Key information

| Process name | getDeviceData data plugin moduleMon |
|---|---|
| Alias | The module monitor |
| Polls network | Yes |
| Brief description | A plugin to gDD that polls a dedicated OID. On HP switches a specific HP OID is used (oidkey hpStackStatsMemberOperStatus), and similarly on 3Com (oidkey 3cIfMauType). For other equipment the generic moduleMon OID is used. For 3Com and HP, the OID actually tells us whether a module is down. For the generic test, we (for lack of something better) check whether an arbitrary ifindex on the module in question responds. If the module has no ports, no check is done. |
| Depends upon | The switch or router must have been processed by gDD, with appropriate data in module and gwport/swport. |
| Updates tables | Posts moduleMon events on the eventq. Also sets the boolean module.up value. |
| Run mode | Daemon, part of gDD. |
| Default scheduling | Depends on the defaultfreq of the moduleMon OID (and likewise for the HP and 3Com OIDs). Defaults to 1 hour. |
| Config file | See gDD |
| Log file | See gDD |
| Programming language | Java |
| Lines of code | Part of gDD, see gDD. |
| Further doc | Not much. |
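The generic module test can be sketched as below. `ifindex_responds` is a hypothetical callable standing in for the SNMP query on one interface. The module dicts stand in for the module and gwport/swport data. The sketch picks the first ifindex as the "arbitrary" one, skips modules without ports, and reports a module only when it changes from up to down.

```python
def check_module(module_ifindexes, ifindex_responds):
    """Return True (up), False (down), or None if the module has no ports."""
    if not module_ifindexes:
        return None  # no ports: no check is done
    # Any port on the module will do; the first one is used here.
    return ifindex_responds(module_ifindexes[0])


def poll_modules(modules, ifindex_responds, module_up):
    """
    modules:   module name -> list of ifindexes on that module
    module_up: module name -> last known module.up value (updated in place)
    Returns the modules that have just gone down (moduleDown candidates).
    """
    went_down = []
    for name, ifindexes in modules.items():
        up = check_module(ifindexes, ifindex_responds)
        if up is None:
            continue
        if module_up.get(name, True) and not up:
            went_down.append(name)
        module_up[name] = up
    return went_down
```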

The event and alert system

See also the event and alert system page.

eventEngine

Key information

| Process name | eventEngine |
|---|---|
| Alias | The event engine |
| Polls network | No |
| Brief description | The event engine processes events on the event queue and posts alerts on the alert queue. It has a mechanism for correlating events: e.g. if pping posts up events right after down events, these are not sent as boxDown alerts, only as boxDown warnings. Furthermore, if a number of boxDown events are seen, the event engine looks at the topology and reports boxShadow events for boxes in the shadow of the box that is the root cause. |
| Depends upon | The various monitors need to post events on the event queue (with the event engine as target) for the event engine to have work. alertmsg.conf needs to be filled in for all events, since messages in alertqmsg (and alerthistmsg) are formatted accordingly. |
| Updates tables | Deletes records from eventq as they are processed. Posts records on alertq with associated alertqvar and alertqmsg records, and similarly on alerthist with associated alerthistvar and alerthistmsg records. |
| Run mode | daemon |
| Default scheduling | The event engine checks the eventq every ??? seconds. boxDown-warning-wait-time and boxDown-wait-time are configurable values. Parameters for module events are also configurable. Servicemon events currently are not; a solution is being looked into. |
| Config file | eventEngine.conf |
| Log file | eventEngine.log |
| Programming language | Java |
| Lines of code | Approx 3000 lines |
| Further doc | NAVMore report ch 3.6 (Norwegian). Updates in tigaNAV report ch 4.3.1. |

Details

- eventEngine is plugin based, with two classes of plugins: one for event types and one for physical devices. Further details are in the referenced documentation.
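The boxShadow idea from the table above can be illustrated with a small sketch. It assumes a hypothetical `upstream` map, built from the physical topology, that gives the next box towards the router. A down box is treated as shadowed if any box further up its path is also down; otherwise it is a root cause. The grace periods and the actual event and alert handling are left out.

```python
def classify_down_boxes(down, upstream):
    """
    down:     set of boxes with boxDown events
    upstream: box -> next box towards the router (missing or None at the top)
    Returns (root_causes, shadowed).
    """
    root_causes, shadowed = set(), set()
    for box in down:
        parent = upstream.get(box)
        seen = set()  # guards against loops in inconsistent topology data
        while parent is not None and parent not in seen:
            if parent in down:
                shadowed.add(box)  # hidden behind a box that is down
                break
            seen.add(parent)
            parent = upstream.get(parent)
        else:
            root_causes.add(box)  # no down box above it
    return root_causes, shadowed
```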

maintengine

Key information

| Process name | maintengine |
|---|---|
| Alias | The maintenance engine |
| Polls network | No |
| Brief description | Checks the defined maintenance schedules. If the start or end of a maintenance period occurs at this run time, the relevant maintenanceEvents are posted on the eventq, one for each netbox and/or service in question. |
| Depends upon | NAV users must set up maintenance schedules, which are stored in the maintenance tables (maint_task, maint_component). |
| Updates tables | Posts maintenance events on the eventq. Also updates maint_task.state. |
| Run mode | cron |
| Default scheduling | Every 5 minutes (*/5 * * * *) |
| Config file | None |
| Log file | maintengine.log |
| Programming language | Python |
| Lines of code | Approx 300 |
| Further doc | Old doc: tigaNAV report ch 8. The maintenance system was rewritten for NAV 3.1. See here for more. |

Details

- See referenced doc.

alertEngine

Key information

| Process name | alertEngine |
|---|---|
| Alias | The alert engine |
| Polls network | No |
| Brief description | The alert engine processes alerts on the alert queue and checks whether any users have subscribed to the alert in their active user profile. If so, the alert engine sends the alert to the user, either as email or SMS, depending on the profile. The alert engine sends email itself, whereas SMS messages are inserted on the SMS queue for the SMS daemon to handle. If a user has chosen to queue email messages, the alert engine uses the alertprofiles.queue table. |
| Depends upon | eventEngine must be running and posting to alertq. NAV users must have set up their profiles; if there are no matches, the alerts are simply deleted from alertq. |
| Updates tables | Deletes records from alertq with associated alertqvar and alertqmsg records. Inserts records on alertprofiles.smsq. For user profiles that require queued email messages, the alertprofiles.queue table is used. |
| Run mode | daemon |
| Default scheduling | Checks for new alerts every 60 seconds by default. |
| Config file | alertengine.cfg |
| Log file | alertengine.log and alertengine.err.log |
| Programming language | Perl |
| Lines of code | Approx 1900 |
| Further doc | NAVMore report ch 3.7 and 3.8 (Norwegian). |

Details

- See the referenced doc.

smsd

Key information

| Process name | smsd |
|---|---|
| Alias | The SMS daemon |
| Polls network | No |
| Brief description | Checks the navprofiles.smsq table for new messages, formats the messages into one SMS and dispatches it via one or more dispatchers with a general interface. A dispatcher handler layer takes care of supporting multiple dispatchers. |
| Depends upon | alertEngine fills the navprofiles.smsq table |
| Updates tables | Updates the sent and timesent values of navprofiles.smsq |
| Run mode | Daemon process |
| Default scheduling | Polls the SMS queue at a configurable interval (the delay option, 30 seconds by default) |
| Config file | smsd.conf |
| Log file | smsd.log |
| Programming language | Python (Perl in 3.1) |
| Lines of code | Approx 1200 |
| Further doc | subsystem/smsd/README in the NAV sources describes the available dispatchers and more |

Details

Usage

As printed when smsd is given the --help argument:

Usage: smsd [-h] [-c] [-d sec] [-t phone no.]

-h, --help Show this help text

-c, --cancel Cancel (mark as ignored) all unsent messages

-d, --delay Set delay (in seconds) between queue checks

-t, --test Send a test message to <phone no.>

Especially note the --test option, which is useful for debugging when experiencing problems with smsd.

Configuration

The configuration file smsd.conf lets you configure the following:

| parameter | description | default |
|---|---|---|
| username | System user the process should try to run as | navcron |
| delay | Delay in seconds between queue runs | 30 |
| autocancel | Automatically cancel all messages older than 'autocancel'; 0 disables this. Same format as the PostgreSQL interval type, e.g. '1 day 12 hours'. | 0 |
| loglevel | Filter level for log messages. Valid options are DEBUG, INFO, WARNING, ERROR, CRITICAL | INFO |
| mailwarnlevel | Filter level for log messages sent by mail. | ERROR |
| mailserver | Mail server to send log messages through. | localhost |
| dispatcherretry | Time, in seconds, before a dispatcher is retried after a failure | 300 |
| dispatcherN | Dispatchers in prioritized order, cheapest first, safest last. N should be 1, 2, 3, … | dispatcher1 defaults to GammuDispatcher |

In addition, some dispatchers need extra configuration as described in comments in the config file.
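The dispatcherN and dispatcherretry options describe a failover scheme. The sketch below shows that scheme under stated assumptions and is not smsd's actual dispatcher handler. The class, its `send` method and the `(name, send_function)` pairs are hypothetical. The handler tries dispatchers in priority order and skips any dispatcher that failed less than `retry_seconds` ago.

```python
import time


class DispatcherHandler:
    """Try dispatchers in priority order, skipping recently failed ones."""

    def __init__(self, dispatchers, retry_seconds=300, clock=time.monotonic):
        self.dispatchers = dispatchers      # list of (name, send_function)
        self.retry_seconds = retry_seconds  # cf. the dispatcherretry option
        self.clock = clock
        self.failed_at = {}                 # name -> time of last failure

    def send(self, phone, message):
        now = self.clock()
        for name, send_function in self.dispatchers:
            failed = self.failed_at.get(name)
            if failed is not None and now - failed < self.retry_seconds:
                continue  # still inside the retry back-off window
            try:
                send_function(phone, message)
            except Exception:
                self.failed_at[name] = now  # fall through to the next one
                continue
            self.failed_at.pop(name, None)
            return name
        raise RuntimeError("all dispatchers failed or are waiting to be retried")
```

With a cheap modem dispatcher first and a safer fallback second, a broken modem costs one failed attempt per retry window instead of one per message.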

The snmptrapd

Key information

| Process name | snmptrapd |
|---|---|
| Alias | The SNMP trap daemon |
| Polls network | No |
| Brief description | Listens on port 162 for incoming traps. When snmptrapd receives a trap, it puts all the information in a trap object and sends the object to every traphandler listed in the “traphandlers” option in snmptrapd.conf. It is then up to each traphandler to decide whether to process the trap or discard it. |
| Depends upon | |
| Updates tables | Depends on the “traphandlers”. Posting on eventq would be typical. |
| Run mode | Daemon process |
| Default scheduling | |
| Config file | snmptrapd.conf |
| Log file | snmptrapd.log and snmptraps.log |
| Programming language | Python |
| Lines of code | Approx 200 + traphandlers |
| Further doc | - |
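The fan-out to traphandlers described above can be sketched as follows. The `Trap` class and the convention that a handler returns `True` when it processed the trap are hypothetical, and are not snmptrapd's actual interfaces. The sketch catches exceptions so that a failing handler does not prevent the remaining handlers from seeing the trap.

```python
import logging

logger = logging.getLogger("snmptrapd-sketch")


class Trap:
    """Hypothetical container for the information in one received trap."""

    def __init__(self, source, oid, varbinds):
        self.source = source      # IP address of the agent that sent the trap
        self.oid = oid            # trap OID
        self.varbinds = varbinds  # dict of OID -> value


def dispatch(trap, traphandlers):
    """Offer the trap to every handler; return the names of those that processed it."""
    processed_by = []
    for handler in traphandlers:
        try:
            # Each handler decides for itself whether the trap is relevant.
            if handler(trap):
                processed_by.append(handler.__name__)
        except Exception:
            # One failing handler should not keep the trap from the others.
            logger.exception("traphandler %s failed", handler.__name__)
    return processed_by
```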

Collecting statistics

makecricketConfig

Key information

| Process name | makecricketconfig |
|---|---|
| Alias | The Cricket configuration builder |
| Polls network | No |
| Brief description | |
| Depends upon | That gDD has filled the gwport and swport tables (and more…) |
| Updates tables | The RRD database (rrd_file and rrd_datasource) |
| Run mode | cron |
| Default scheduling | Every night (12 5 * * *) |
| Config file | None |
| Log file | cricket-changelog |
| Programming language | Perl |
| Lines of code | Approx 1600 |
| Further doc | How to configure Cricket addons in NAV v3 |

Details

The Cricket collector (not NAV)

Key information

| Process name | collect-subtrees |
|---|---|
| Alias | cricket collector (not NAV) |
| Polls network | Yes |
| Brief description | Polls routers and switches for counters as configured in the cricket configuration tree. |
| Depends upon | makecricketconfig to build the configuration tree |
| Updates tables | Updates RRD files |
| Run mode | cron |
| Default scheduling | Every 5 minutes. (Before NAV 3.2 there was a one-minute run mode for gigabit ports. As of NAV 3.2, 64-bit counters are used and the 5-minute run mode is used for all counters.) |
| Config files | Directory tree under cricket-config/ |
| Log file | cricket/giga.log and cricket/normal.log |
| Programming language | Not relevant |
| Lines of code | Not relevant |
| Further doc | Not relevant |

cleanrrds

Key information

| Process name | cleanrrds |
|---|---|
| Alias | RRD cleanup script |
| Polls network | No |
| Brief description | This script finds all the RRD files in use. Its purpose is to delete RRD files that are no longer active, to save disk space. |
| Depends upon | - |
| Updates tables | - |
| Run mode | cron |
| Default scheduling | Nightly (0 5 * * *) |
| Config file | ? |
| Log file | ? |
| Programming language | Perl |
| Lines of code | Approx 200 |
| Further doc | - |

Details

- If an RRD file is found and is in the RRD database, leave it alone.

- If an RRD file is found that is not in the database, check its last update time. If the last update was less than 2 weeks ago, leave it alone; otherwise, delete the file.
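The two rules above can be sketched as a dry-run function that lists the files to delete instead of deleting them. Two assumptions apply. First, the file's modification time is used as its "last update time", which holds for files written by RRD updates but is not necessarily what cleanrrds reads. Second, `known_files` is a hypothetical set of paths taken from the RRD database.

```python
import os
import time

TWO_WEEKS = 14 * 24 * 3600  # seconds


def rrd_files_to_delete(rrd_dir, known_files, now=None):
    """List RRD files not in the RRD database and not updated for two weeks."""
    now = time.time() if now is None else now
    stale = []
    for dirpath, _dirnames, filenames in os.walk(rrd_dir):
        for filename in filenames:
            if not filename.endswith(".rrd"):
                continue
            path = os.path.join(dirpath, filename)
            if path in known_files:
                continue  # in the RRD database: leave it alone
            # Not in the database: delete only if it is stale.
            if now - os.path.getmtime(path) >= TWO_WEEKS:
                stale.append(path)
    return sorted(stale)
```

Returning a list keeps the decision separate from the deletion itself, so the result can be reviewed before calling `os.remove` on each path.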

Other systems

logengine

Key information

| Process name | logengine |
|---|---|
| Alias | The Cisco syslog analyzer |
| Polls network | No |
| Brief description | Analyzes Cisco syslog messages from switches and routers and inserts them in a structured manner in the logger database. This makes it easy to, for example, search for log messages of a certain severity. |
| Depends upon | syslogd running on the NAV machine. logengine parses the syslog for Cisco syslog messages. |
| Updates tables | The tables in the logger database |
| Run mode | cron |
| Default scheduling | Every minute (* * * * *) |
| Config file | logger.conf |
| Log file | None |
| Programming language | Python |
| Lines of code | Approx 350 |
| Further doc | NAVMore report ch 2.4 (Norwegian). |

Details

- See the referenced doc. Note that this system was at one time intended for NAV's own logs. This idea was later abandoned.

Arnold

Key information

| Process name | |
|---|---|
| Alias | |
| Polls network | |
| Brief description | |
| Depends upon | |
| Updates tables | |
| Run mode | |
| Config file | arnold/arnold.cfg, arnold/noblock.cfg and arnold/mailtemplates/* |
| Log file | arnold/arnold.log |
| Default scheduling | |
| Programming language | |
| Lines of code | |
| Further doc | Arnold |